Post-deployment data science has been on fire lately

Latest papers and content about post-deployment data science.

The world of post-deployment data science is more active than ever. In the last couple of weeks, there has been plenty of new research in the space on topics such as quantifying uncertainty in classification, adapting LLMs to work under concept drift, and conformal prediction under covariate shift.

At NannyML, we have kept up too. Just this week, the team published some great pieces.

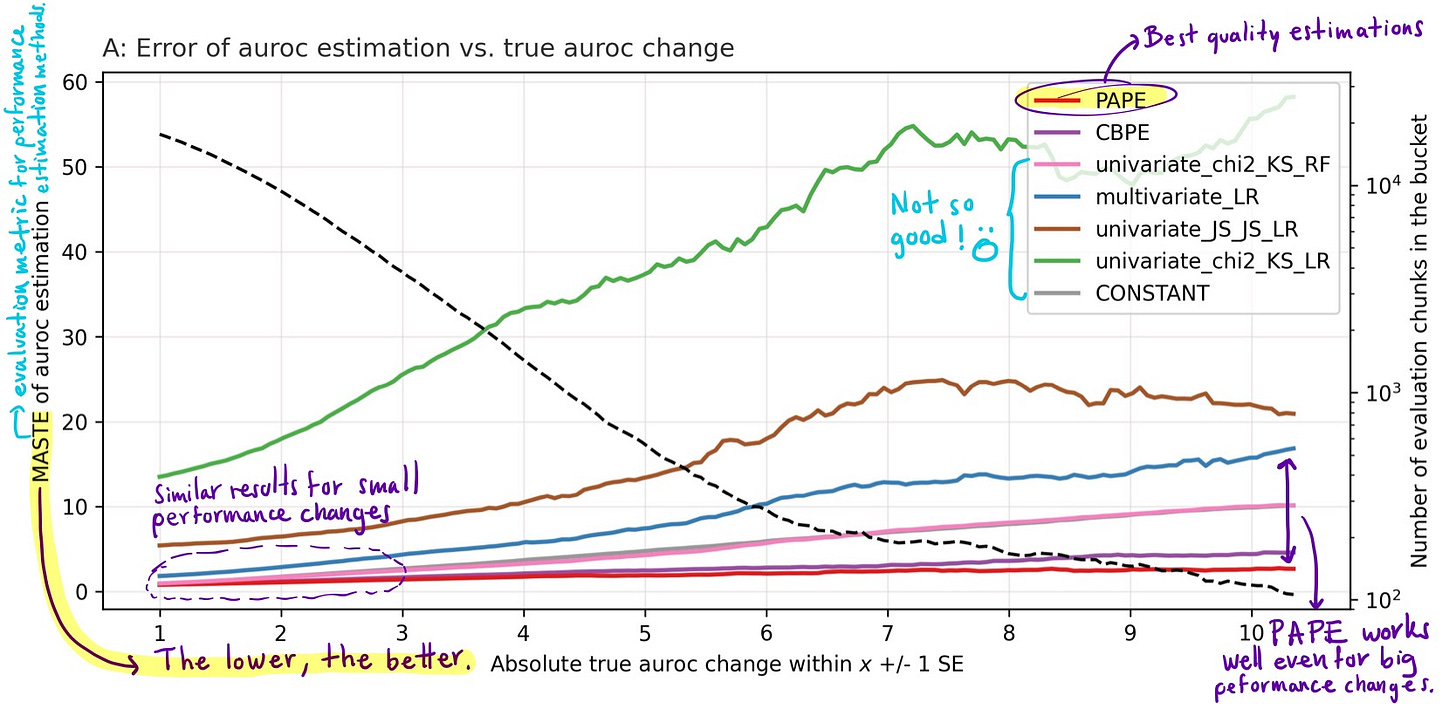

We ran 580 model-dataset experiments to show that, even if you try very hard, it is almost impossible to know that a model is degrading just by looking at data drift results.

Article: https://www.nannyml.com/blog/data-drift-estimate-model-performance

In our opinion, data drift detection methods are very useful for understanding what went wrong with a model, but they are not the right tool for knowing how well the model is actually performing.
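To make the argument concrete, here is a toy sketch. It is not the setup from our 580 experiments: the model, the synthetic data, and the helper names (`model`, `make_data`, `ks_statistic`) are hypothetical, and the drift score is simply the largest per-feature two-sample Kolmogorov-Smirnov statistic against the reference data. It shows both ways drift results can mislead: strong drift in a feature the model ignores, and a concept shift that hurts accuracy without changing the input distribution.

```python
import numpy as np

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between two empirical CDFs."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return float(np.max(np.abs(cdf_a - cdf_b)))

def model(X):
    # Hypothetical fixed model: predicts 1 when the first feature is positive.
    return (X[:, 0] > 0).astype(int)

def make_data(rng, n, x2_shift=0.0, threshold=0.0):
    # Synthetic data: the target depends only on x1 (plus noise); x2 is irrelevant.
    X = rng.normal(size=(n, 2))
    X[:, 1] += x2_shift
    y = (X[:, 0] + 0.3 * rng.normal(size=n) > threshold).astype(int)
    return X, y

rng = np.random.default_rng(0)
scenarios = {
    "reference": make_data(rng, 5000),
    # Covariate shift in an irrelevant feature: strong drift, no performance impact.
    "covariate_shift_only": make_data(rng, 5000, x2_shift=2.0),
    # Concept drift: inputs unchanged, but the true decision boundary moves.
    "concept_drift_only": make_data(rng, 5000, threshold=1.0),
}

X_ref, _ = scenarios["reference"]
results = {}
for name, (X, y) in scenarios.items():
    accuracy = float(np.mean(model(X) == y))
    # Drift score: the largest per-feature KS statistic against the reference data.
    max_ks = max(ks_statistic(X_ref[:, j], X[:, j]) for j in range(X.shape[1]))
    results[name] = (accuracy, max_ks)
    print(f"{name:<22} accuracy={accuracy:.3f} max KS={max_ks:.3f}")
```

With these settings, the covariate-shift scenario should show a large KS value with roughly the reference accuracy, while the concept-drift scenario should show the opposite. Real models and data are rarely this clean, but the two failure modes are the same.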

Using a classification model to detect multivariate data drift

Article: https://www.nannyml.com/blog/data-drift-domain-classifier

Miles wrote a great guide on how to use Domain Classifier, NannyML's latest data drift detection method.
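The core idea of a domain classifier can be sketched in a few lines: label reference rows 0 and analysis rows 1, train a classifier to tell them apart, and use its cross-validated AUROC as the drift score. An AUROC near 0.5 means the two datasets are indistinguishable; values well above 0.5 indicate drift. This is a minimal sketch under our own assumptions, not NannyML's implementation: it swaps in plain logistic regression fitted with numpy gradient descent, and all function names are hypothetical. Because this classifier is linear, it mainly picks up shifts in feature means; a more flexible model such as gradient-boosted trees would catch richer changes.

```python
import numpy as np

def fit_logistic(X, y, lr=0.5, steps=300):
    """Plain logistic regression fitted by batch gradient descent (with a bias term)."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)
    return w

def predict_proba(w, X):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return 1.0 / (1.0 + np.exp(-Xb @ w))

def auroc(y_true, scores):
    """AUROC as P(score of a positive > score of a negative), counting ties as 1/2."""
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))

def domain_classifier_auroc(reference, analysis, n_folds=5, seed=0):
    """Cross-validated AUROC of a classifier separating reference (0) from analysis (1) rows."""
    X = np.vstack([reference, analysis])
    y = np.concatenate([np.zeros(len(reference)), np.ones(len(analysis))])
    X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize so gradient descent is well behaved
    folds = np.array_split(np.random.default_rng(seed).permutation(len(X)), n_folds)
    scores = np.empty(len(X))
    for k, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        w = fit_logistic(X[train_idx], y[train_idx])
        scores[test_idx] = predict_proba(w, X[test_idx])  # out-of-fold predictions only
    return auroc(y, scores)

rng = np.random.default_rng(42)
reference = rng.normal(size=(1000, 3))
no_drift = rng.normal(size=(1000, 3))
drifted = rng.normal(size=(1000, 3))
drifted[:, 0] += 1.0  # mean shift in the first feature only

auc_no_drift = domain_classifier_auroc(reference, no_drift)
auc_drifted = domain_classifier_auroc(reference, drifted)
print(f"no drift: {auc_no_drift:.3f}, drifted: {auc_drifted:.3f}")
```

Scoring only out-of-fold rows matters: evaluating the classifier on the rows it was trained on would inflate the AUROC even when there is no drift.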

Comparing multivariate data drift detection methods

Article: https://www.nannyml.com/blog/tutorial-multivariate-drift-comparison

Now that NannyML supports two different multivariate data drift detection methods, Kavita addressed an inevitable question: What’s the difference between Data Reconstruction with PCA and the new method, Domain Classifier?
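For a feel of the other method, here is a minimal sketch of the general idea behind data reconstruction with PCA: standardize features with reference statistics, fit PCA on the reference data, then compress and decompress new data and track the mean reconstruction error. In a monitoring setup you would typically compare this error against a range derived from reference data. The function names, the single component, and the synthetic data are illustrative assumptions, not NannyML's implementation or defaults. The example breaks the correlation between two features while keeping each feature's distribution the same, a change that univariate drift methods cannot see but reconstruction error picks up.

```python
import numpy as np

def fit_reconstructor(reference, n_components):
    """Standardize with reference statistics and keep the top principal axes."""
    mean, std = reference.mean(axis=0), reference.std(axis=0)
    z = (reference - mean) / std
    _, _, vt = np.linalg.svd(z, full_matrices=False)  # rows of vt are principal axes
    return mean, std, vt[:n_components]

def reconstruction_error(data, mean, std, components):
    """Mean Euclidean distance between standardized rows and their PCA reconstruction."""
    z = (data - mean) / std
    z_hat = (z @ components.T) @ components  # compress to the subspace, then decompress
    return float(np.mean(np.linalg.norm(z - z_hat, axis=1)))

rng = np.random.default_rng(7)
n = 5000

def correlated_sample():
    # Two strongly correlated features: x2 = x1 + small noise.
    x1 = rng.normal(size=n)
    return np.column_stack([x1, x1 + 0.1 * rng.normal(size=n)])

reference = correlated_sample()
analysis_same = correlated_sample()
# Same marginal distributions (N(0, 1) and N(0, 1.01)), but the correlation is gone.
analysis_broken = np.column_stack([rng.normal(size=n), np.sqrt(1.01) * rng.normal(size=n)])

params = fit_reconstructor(reference, n_components=1)
err_reference = reconstruction_error(reference, *params)
err_same = reconstruction_error(analysis_same, *params)
err_broken = reconstruction_error(analysis_broken, *params)
print(f"reference={err_reference:.3f} same={err_same:.3f} broken={err_broken:.3f}")
```

With these settings, `err_same` should stay close to `err_reference`, while `err_broken` should be many times larger, even though each feature on its own looks unchanged.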

How to run a robust PoC to evaluate an ML monitoring tool

Article: https://www.nannyml.com/blog/evaluate-nannyml

For everybody looking into ML monitoring tools, we wrote a step-by-step guide on designing a proof-of-concept (PoC) project to evaluate whether NannyML is the right tool for your use cases.

Papers that caught our eye 👀

ROC Confidence Bands Using Conformal Prediction

Paper: https://arxiv.org/abs/2405.12953

Adapting multi-modal LLMs to Concept Drift

Paper: https://arxiv.org/abs/2405.13459

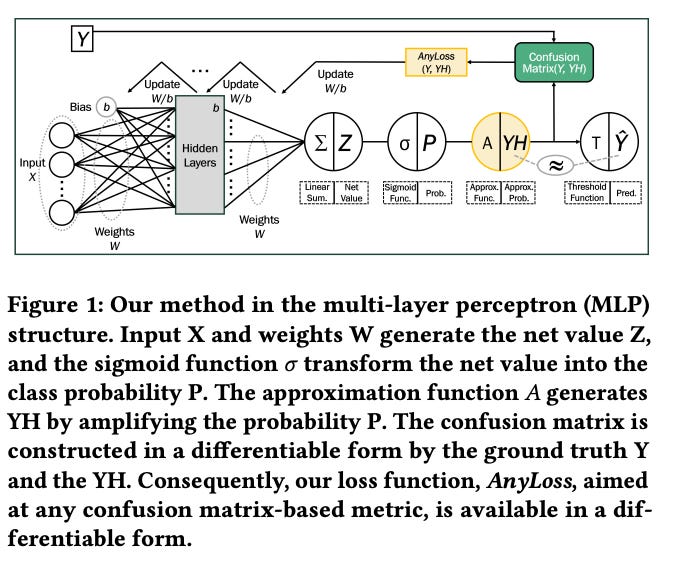

AnyLoss: Transforming Classification Metrics into Loss Functions

Paper: https://arxiv.org/abs/2405.14745

Shoutout to the community 💜

One of the great perks of building tools for data scientists is learning from them. Check out the comments on this post; they are full of great insights!

Thanks to Neil Leiser for the shoutout on his AI Stories Podcast 🫶

Check out his latest episode, where he interviews the one and only Maria Vechtomova from

Want to see our latest algorithms in action?

Join Wiljan on 11th June to learn how advanced data science teams monitor their models.

Topic: Build or Buy: Should you pay to monitor your ML models?

Date: 11th June 2024, 12:30 pm CET

Link: Register for the event